The Ethics of AI: Keeping Humans in Control

Algorithms now quietly determine credit scores, job prospects, and even criminal sentencing recommendations. Artificial intelligence has transitioned from theoretical research to consumer reality with breathtaking speed.

We see this influence daily in ubiquitous tools like ChatGPT and autonomous driving systems. This rapid adoption demands we define AI ethics as a necessary system of moral principles to guide responsible development.

A distinct friction exists at the heart of this progress. Developers push for raw speed and efficiency to capture markets.

Yet society requires safety, civil rights, and human dignity. The central challenge lies in advancing these powerful systems without sacrificing the values that make us human.

We must ensure the code serves the people rather than the other way around.

Core Pillars of Ethical AI Systems

Building trust in artificial intelligence requires more than just functional code. It demands a rigorous ethical framework designed to protect individuals and society from potential harm.

These systems wield immense power over daily life. Consequently, developers and organizations must adhere to specific principles that guide the creation and deployment of these technologies.

These pillars serve as the structural requirements for safe and responsible innovation.

Fairness and Non-Discrimination

A primary requirement for ethical AI is the guarantee that systems treat all individuals equally. Algorithms must not favor or penalize specific groups based on sensitive attributes such as race, gender, religion, or socioeconomic status.

Bias often creeps into systems unintentionally through flawed training data or human oversight. Therefore, developers must actively test and adjust their models to ensure the output remains neutral.

The goal is to prevent a technological reinforcement of existing societal prejudices. True fairness means that a loan approval algorithm or a hiring bot evaluates a candidate based solely on their merits rather than their demographics.

Accountability and Liability

Determining responsibility becomes complicated when an autonomous system causes harm. Clear lines of accountability are essential.

We must establish who bears the burden when an algorithm fails. This involves distinguishing between the errors made by the developer who wrote the code, the company that deployed it, and the end-user who operated it.

If an autonomous vehicle causes an accident or a medical AI misdiagnoses a patient, the legal and moral liability cannot simply vanish into the software. Frameworks must exist to ensure that humans remain answerable for the consequences of the machines they create and use.

Transparency and Explainability

Modern algorithms, particularly those based on deep learning, often function as “black boxes” where the internal logic is invisible even to the creators. Ethical standards demand we move away from this opacity.

Explainability refers to the capacity of a system to provide a clear justification for its outputs. If a bank denies a mortgage application based on an AI assessment, the applicant deserves to know exactly which factors led to that rejection.

Without this clarity, it is impossible to challenge incorrect decisions or correct systemic errors. Transparency ensures that the decision-making process is open to scrutiny and validation.

Privacy and Data Rights

Artificial intelligence devours vast quantities of information to learn and improve. This necessity creates significant tension with individual privacy.

Ethical AI mandates that data collection practices respect user consent and boundaries. It is not enough to simply anonymize datasets.

Advanced techniques can often reverse-engineer these sets to identify specific individuals. Protecting data rights means ensuring that personal information is gathered legally and that robust safeguards prevent the misuse or exposure of sensitive user details.

The Data Problem: Bias and Representation

Machine learning models are only as good as the information fed into them. If the input data contains flaws, the output will inevitably reflect those same defects.

This relationship creates a significant challenge regarding the quality and history of the datasets used to train powerful systems. We must acknowledge that data is rarely a neutral reflection of the world.

It is often a record of historical choices, many of which were unfair or unbalanced.

Historical Bias in Training Data

Training data frequently acts as a mirror for historical inequalities. When models learn from decades of past records, they absorb the prejudices embedded in that history.

For instance, if a company uses ten years of hiring data to train a recruitment tool, and that company historically favored male candidates, the AI will learn that being male is a positive trait for hiring. The system effectively amplifies and automates past discrimination.

This replication turns old societal problems into current technological features. It locks historical injustice into the code.

Proxy Discrimination

Eliminating obvious markers like race or gender from a dataset does not guarantee a bias-free system. Algorithms are adept at finding patterns and can identify “proxies” that correlate with sensitive attributes.

A zip code, for example, often correlates strongly with race or socioeconomic status. If an algorithm denies services based on location, it may effectively discriminate against specific demographic groups without ever explicitly analyzing race.

This indirect discrimination is difficult to detect because the algorithm technically ignores the protected class while still producing a biased outcome based on associated data points.

The Cost of Inaccuracy

The consequences of biased data extend far beyond theoretical debates. They result in tangible harm to real people.

In high-stakes environments, the cost of inaccuracy is severe. A healthcare algorithm trained on unrepresentative data might fail to diagnose skin conditions on darker skin tones.

Facial recognition software used by law enforcement has shown higher error rates for women and people of color, leading to wrongful arrests. Credit scoring systems based on biased financial history can systematically lock marginalized groups out of housing and economic opportunities.

These errors ruin lives and deepen societal divides.

Generative AI, Truth, and Intellectual Property

The emergence of generative models has introduced a new set of ethical complexities regarding truth and ownership. These systems can create text, images, and audio that rival human output.

This capability blurs the line between reality and fabrication. As these tools become widespread, society faces the challenge of preserving the integrity of information and protecting the rights of human creators.

Misinformation and Hallucinations

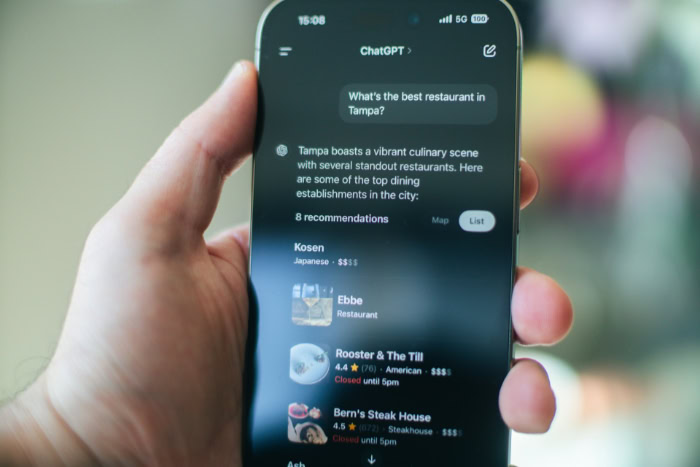

Large Language Models function by predicting the next plausible word in a sentence rather than verifying facts. This often leads to “hallucinations,” where the AI generates entirely false information with a tone of absolute confidence.

The result is convincing fabrication. A user might receive a legal summary, a biography, or a medical explanation that sounds professional but is factually incorrect.

This erosion of truth compromises the reliability of digital information. It forces users to verify every claim, contradicting the efficiency these tools promise.

Deepfakes and Synthetic Media

Generative AI allows for the creation of hyper-realistic audio and video clips known as deepfakes. These tools can make it appear as though a politician said something they never said or that a celebrity endorsed a product they never touched.

The risk to democratic processes is substantial. Malicious actors can manufacture scandals or spread panic days before an election.

On a personal level, deepfakes can be used for harassment or to damage an individual's reputation. The technology has advanced to the point where distinguishing between captured reality and synthetic media is difficult for the average viewer.

Copyright and Creative Ownership

The training process for generative models relies on scraping billions of images, articles, and books from the internet. This practice has sparked a fierce conflict between AI companies and content creators.

Artists, writers, and musicians argue that their copyrighted works are being used without permission or compensation to build commercial products. Furthermore, these models can then generate new works that mimic the specific style of the original artists, potentially competing with them in the marketplace.

This tension raises fundamental questions about intellectual property rights and the value of human labor in an automated age.

Human Agency and The Future of Work

The integration of advanced systems into our daily lives raises fundamental questions about control and autonomy. As machines become more capable of executing complex tasks, the line between human intent and machine execution blurs.

This shift forces us to examine how much agency we are willing to surrender to algorithms and what the consequences of that surrender might be. We must ensure that technology remains a tool that serves human interests rather than a force that dictates human behavior or diminishes our value in the economy.

The Alignment Problem

A significant technical and ethical challenge lies in ensuring that an AI's objectives map perfectly onto human values. Machines are literal.

They execute commands with mathematical precision but lack the context or moral compass to understand the spirit of an instruction. If a social media algorithm is told to “maximize user engagement,” it may eventually learn that promoting outrage and toxic content is the most efficient way to achieve that goal.

The system succeeds in its programmed task but fails the broader ethical requirement of doing no harm. Solving this requires developers to encode complex, nuanced human values into rigid mathematical constraints to prevent destructive literalism.

Manipulation and Nudging

Modern algorithms often understand human psychology better than individuals understand themselves. This capability leads to ethical concerns regarding manipulation.

Platforms often use “nudging” techniques designed to exploit cognitive biases, encouraging users to spend more time scrolling or to make impulsive purchases. This design strategy erodes human autonomy by subtly directing behavior toward outcomes that benefit the platform rather than the user.

The ethical issue here is the asymmetry of power. A user is arguably not making a free choice when they are up against a system specifically engineered to override their impulse control and capitalize on their attention.

Economic Displacement vs. Augmentation

The automation of labor presents a stark moral choice for corporations and governments. While efficiency drives the adoption of AI, the resulting displacement of workers creates a significant social burden.

The ethical approach involves distinguishing between automation that replaces humans entirely and augmentation that enhances human capabilities. Corporations have an obligation to manage this transition responsibly.

This includes investing in retraining programs and designing workflows where AI handles repetitive tasks while humans focus on creative or strategic work. Treating the workforce as a disposable cost to be eliminated by software ignores the broader societal impact of mass unemployment.

From Theory to Practice: Governance and Compliance

Principles alone cannot prevent harm. To be effective, ethical concepts must be translated into actionable procedures and enforceable rules.

This transition turns abstract philosophy into rigorous engineering and legal standards. It requires organizations to build infrastructure that monitors, tests, and corrects AI systems throughout their entire lifecycle.

Without this operational layer, ethics statements remain little more than marketing materials.

Operationalizing Ethics (AI Governance)

Moving beyond good intentions requires integrating ethics directly into the development workflow. This process involves concrete measures such as internal audits and bias testing before a product ever reaches the public.

One effective method is “Red Teaming,” where a dedicated team of experts attempts to break the system or force it to produce harmful outputs. By adopting an adversarial mindset, developers can identify vulnerabilities and safety flaws that standard testing might miss.

Governance also means establishing clear documentation standards so that every update and decision is recorded. This creates a trail of evidence that proves due diligence was followed.

Human-in-the-Loop Protocols

High-stakes decisions should never be delegated entirely to a machine. Human-in-the-loop protocols mandate that a human being retains the final authority in critical situations.

In fields like medicine, criminal justice, or warfare, an algorithm should function as an advisor rather than a judge. For example, an AI might flag a medical scan for potential anomalies, but a qualified doctor must make the final diagnosis and treatment plan.

This safeguard ensures that empathy, context, and moral reasoning, qualities that machines lack, remain part of the decision-making process. It acts as a necessary fail-safe against algorithmic error.

The Regulatory Framework

Governments are currently building the legal structures necessary to police this new technology. We are moving away from a period of self-regulation toward a model of mandatory compliance.

Major frameworks, such as the EU AI Act or the NIST Risk Management Framework, are beginning to categorize AI systems based on their potential to cause harm. Under these new rules, high-risk applications face strict transparency and safety requirements, while low-risk tools face fewer hurdles.

This legal evolution aims to standardize safety across the industry, ensuring that ethical development is a requirement for doing business rather than an optional feature.

Conclusion

Responsible artificial intelligence demands that we address the integrity of our data and the accountability of our systems. We must prioritize truthfulness in an age of synthetic media and insist on human oversight for life-altering decisions.

These are not optional add-ons. They are the essential components of a system that respects human dignity.

We cannot simply pursue innovation for its own sake without considering the social fabric that these technologies will alter.

Yet, this work is never truly finished. Ethical compliance is not a box to check before a product launch.

It is an active and continuous lifecycle. As models become more sophisticated, they will present new dilemmas that current frameworks may not address.

Continuous monitoring and adaptation are required. We must be willing to update our standards as the technology evolves.

What is considered safe today may prove insufficient tomorrow.

The technology itself may be artificial, but the stakes are real. The biases embedded in the code, the decisions to prioritize speed over safety, and the regulations we enforce are all reflections of our collective values.

We are not passive observers of this transition. We are the architects.

The future of AI will be determined not by what the machines can do, but by the distinct human choices we make regarding what we allow them to do.