What Is Memory Bandwidth? Know the Essentials

Think of the split-second decisions in a fast-paced video game, the intense calculations behind AI breakthroughs, or the sheer volume of data processed in scientific supercomputers. None of these feats would be possible without rapid, seamless communication between a processor and its memory.

The rate of this exchange is known as memory bandwidth: the capacity of the highway between processor and memory, which determines how quickly data can travel to and from memory chips. As modern applications push the boundaries of performance, memory bandwidth often emerges as a deciding factor in achieving smooth gameplay, efficient AI training, and groundbreaking scientific discoveries.

Core Concepts

A system’s memory bandwidth often sets the upper limit on how much data can be moved per second between memory and the processors, whether those are CPUs or GPUs. The faster this transfer occurs, the more effective a device becomes at handling complex, data-hungry workloads, from high-definition gaming to deep learning applications.

Memory bandwidth, therefore, serves as a crucial foundation for the overall speed and efficiency of modern computing systems. Grasping its underlying concepts helps make sense of why certain upgrades and hardware choices can dramatically affect real-world performance.

Definition and Measurement

Memory bandwidth refers to the rate at which data can flow between memory chips and processing units within a computer. Simply put, it tells us how much information the system can move every second, usually measured in gigabytes per second (GB/s).

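To quantify memory bandwidth, a straightforward formula brings together four elements: the memory’s clock speed, the number of data transfers per clock cycle, the bus width, and the number of memory channels. Because bus width is counted in bits, it is divided by 8 to express the result in bytes.

The formula looks like this:

$$ \text{Bandwidth (B/s)} = \text{Clock Speed (Hz)} \times \text{Transfers per Clock} \times \frac{\text{Bus Width (bits)}}{8} \times \text{Channels} $$

For DDR memory, which transfers data twice per clock cycle, the first two terms are usually combined into a single effective transfer rate quoted in megatransfers per second (MT/s).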

Each term captures one dimension of how fast or how wide the memory path is. The result is the theoretical peak amount of data that can be transferred each second; sustained bandwidth in real workloads is lower, but the peak figure remains a useful metric for comparing different memory setups.
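As a quick check of the formula, the sketch below counts clock speed as effective transfers per second, converts bus width from bits to bytes, and plugs in the figures for DDR4-3200 memory: a 1,600 MHz clock, two transfers per clock, and a 64-bit channel. The function name `peak_bandwidth_gbs` is illustrative, and the output is a theoretical peak, not a measurement.

```python
def peak_bandwidth_gbs(clock_mhz: float, transfers_per_clock: int,
                       bus_width_bits: int, channels: int) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 10**9 bytes)."""
    transfers_per_second = clock_mhz * 1e6 * transfers_per_clock
    bytes_per_transfer = bus_width_bits / 8  # bits -> bytes
    return transfers_per_second * bytes_per_transfer * channels / 1e9

# DDR4-3200: 1,600 MHz clock x 2 transfers per clock = 3,200 MT/s, 64-bit channel
single = peak_bandwidth_gbs(1600, 2, 64, channels=1)
dual = peak_bandwidth_gbs(1600, 2, 64, channels=2)
print(f"single channel: {single:.1f} GB/s")
print(f"dual channel:   {dual:.1f} GB/s")
```

Doubling the channel count doubles the peak (25.6 GB/s to 51.2 GB/s here), which is why dual-channel configurations matter.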

Modern computers often deal with massive files and intricate computational tasks. Sufficient memory bandwidth ensures that processors are not left waiting for data, keeping everything running smoothly, especially under heavy loads.
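The theoretical peak is rarely reached in practice. Sustained bandwidth can be estimated by timing a large memory copy, similar in spirit to the copy test in the STREAM benchmark. The sketch below is a rough approximation under stated assumptions: it relies on `bytearray` slice assignment, which CPython performs as a bulk memory copy, and it counts each copied byte twice (one read, one write), following the STREAM convention. Caches, the operating system, and interpreter overhead all affect the result, so treat it as a ballpark figure.

```python
import time

def measure_copy_bandwidth_gbs(size_bytes: int = 256 * 1024 * 1024,
                               repeats: int = 5) -> float:
    """Rough sustained copy bandwidth in GB/s (1 GB = 10**9 bytes)."""
    # Fill the source with non-zero bytes so every page is really backed by RAM.
    src = bytearray(b"\xa5") * size_bytes
    dst = bytearray(size_bytes)
    dst[:] = src  # warm-up copy: faults in the destination pages
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        dst[:] = src  # same-length slice assignment: one bulk copy in C
        best = min(best, time.perf_counter() - start)
    # Count one read plus one write per byte copied, as STREAM's copy test does.
    return 2 * size_bytes / best / 1e9
```

Calling `measure_copy_bandwidth_gbs()` typically lands well below the theoretical peak, partly because a single thread rarely saturates every memory channel.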

Key Components

Three core factors determine how much memory bandwidth a system can deliver: bus width, clock speed, and the number of memory channels.

Bus width refers to how much data can be carried at once through the memory’s data path. Wider buses, such as 128-bit or 256-bit configurations, allow more data to flow with each clock cycle, similar to expanding a highway to let more cars pass side by side.

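Clock speed, measured in megahertz (MHz), sets the pace at which data moves back and forth between memory and processor. Faster clock speeds mean more data can be transferred every second, much as a faster conveyor belt delivers more items per minute. Because DDR (double data rate) memory transfers data on both the rising and falling edges of the clock, its speed is usually quoted as an effective transfer rate in megatransfers per second (MT/s), twice the actual clock frequency; DDR4-3200, for example, runs a 1,600 MHz clock at 3,200 MT/s.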

Memory channels multiply the number of independent data paths. Single-channel systems handle all memory transfers through one data path, while dual-channel or multi-channel configurations split the workload across several paths, allowing more data to be transferred simultaneously.

This improvement reduces bottlenecks and enhances the system’s responsiveness, particularly in demanding applications like 3D rendering or artificial intelligence.

Successive hardware generations typically increase bus width, clock speed, or the number of channels, raising memory bandwidth further. By combining these components, modern systems keep pace with the growing demands of cutting-edge software and games.

Performance Implications and Bottlenecks

Memory bandwidth often acts as a silent yet powerful limit on how performant a system can be in demanding tasks. From deep learning frameworks to cutting-edge video games, the speed at which information can move between memory and processor frequently shapes the end-user experience and overall system efficiency.

When bandwidth fails to keep pace with the processor’s hunger for data, performance bottlenecks emerge, sometimes making even advanced hardware feel sluggish under load.

Bandwidth Limitations

Bandwidth limitations frequently surface during tasks that demand rapid and sustained data movement. In AI model training, large volumes of data and model weights must be shuffled continually between memory and the processor.

Limited bandwidth here leaves GPUs or CPUs idling as they wait for critical information, dragging down training speeds and extending project timelines. When a training job is memory-bound, improvements in bandwidth can cut hours or days off long runs, and the effect grows as neural networks and datasets grow larger.

Gaming experiences also suffer when memory bandwidth becomes a bottleneck. Modern titles, particularly those rendered in 4K or with expansive open-world environments, often rely on streaming high-resolution textures and detailed assets in real time.

When the memory subsystem cannot supply this data quickly enough, players may notice texture pop-ins, stuttering, or unexpected loading delays. Smooth, immersive gaming depends on the system’s ability to serve up data as fast as the graphics hardware can process it.
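One way to see why streaming stalls appear is to divide memory bandwidth by the frame rate, which gives the most data the GPU could possibly move per frame. Everything the GPU reads or writes while drawing a frame, including textures, geometry, and framebuffer traffic, shares that budget. The 448 GB/s figure below describes a hypothetical graphics card, and the function name is illustrative.

```python
def per_frame_budget_gb(bandwidth_gbs: float, fps: float) -> float:
    """Upper bound on memory traffic per frame, in GB, at a given frame rate."""
    return bandwidth_gbs / fps

BANDWIDTH_GBS = 448.0  # hypothetical graphics card
for fps in (30, 60, 144):
    budget = per_frame_budget_gb(BANDWIDTH_GBS, fps)
    # Frame time in milliseconds alongside the data budget for that frame
    print(f"{fps:>3} fps: {1000 / fps:5.1f} ms, at most {budget:.2f} GB of traffic")
```

Higher frame rates shrink the per-frame budget, so a scene that streams comfortably at 30 fps may start to stutter at 144 fps.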

Workload-Specific Challenges

Some tasks put unique pressure on memory bandwidth due to the nature or scale of their operations. GPU-bound workloads, such as 3D rendering or complex simulations, require continuous feeding of scene data, textures, and compute instructions.

If the bandwidth cannot match the GPU’s processing capabilities, rendering times increase, and interactive experiences lose their fluidity. Artists, animators, and engineers working with real-time graphics engines often feel the impact directly in their work.
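The roofline model is a standard way to reason about whether a workload is limited by the GPU’s compute or by its memory bandwidth: attainable performance is the lower of peak compute and bandwidth multiplied by arithmetic intensity (FLOPs performed per byte moved). The sketch below assumes a hypothetical GPU with 30,000 GFLOP/s of peak compute and 1,000 GB/s of bandwidth; the function names are illustrative.

```python
def attainable_gflops(peak_gflops: float, bandwidth_gbs: float,
                      arithmetic_intensity: float) -> float:
    """Roofline model: the lower of the compute roof and the memory roof.

    arithmetic_intensity is FLOPs performed per byte moved to or from memory.
    """
    return min(peak_gflops, bandwidth_gbs * arithmetic_intensity)

def is_memory_bound(peak_gflops: float, bandwidth_gbs: float,
                    arithmetic_intensity: float) -> bool:
    # The ridge point is the intensity at which both roofs meet.
    ridge_point = peak_gflops / bandwidth_gbs
    return arithmetic_intensity < ridge_point

PEAK_GFLOPS, BANDWIDTH_GBS = 30_000.0, 1_000.0  # hypothetical GPU
# FP32 SAXPY (y = a*x + y): 2 FLOPs per element; reads x and y, writes y (12 bytes)
saxpy_intensity = 2 / 12
print(f"SAXPY: {attainable_gflops(PEAK_GFLOPS, BANDWIDTH_GBS, saxpy_intensity):.1f} GFLOP/s")
print("memory-bound:", is_memory_bound(PEAK_GFLOPS, BANDWIDTH_GBS, saxpy_intensity))
```

Below the ridge point (30 FLOPs per byte for these numbers), extra compute does nothing; only more bandwidth or fewer bytes moved will help.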

Multi-GPU setups, often used in scientific computing or high-end gaming rigs, present another kind of complexity. When tasks are split across multiple GPUs, each card’s memory bandwidth and the interconnect between cards (such as PCIe or NVLink) must both keep up with synchronized data access and transfers.

Insufficient bandwidth leaves GPUs waiting on one another, and poor synchronization can cause inconsistent performance or outright failures in distributed workloads. The need for fast, reliable data movement grows with each added GPU, making high-bandwidth memory and optimized interconnects critical for scaling up performance.
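To estimate how interconnect bandwidth limits multi-GPU scaling, consider a ring all-reduce, one common way to average gradients across GPUs. In a ring of N GPUs, each card sends and receives about 2(N - 1)/N times the message size, so the transfer time is roughly that volume divided by the per-GPU link bandwidth. The sketch ignores per-step latency and overlap with computation; the gradient size and link speeds are hypothetical.

```python
def ring_allreduce_seconds(message_bytes: float, num_gpus: int,
                           link_bandwidth_gbs: float) -> float:
    """Bandwidth term of a ring all-reduce (per-step latency ignored).

    Reduce-scatter and all-gather each take N - 1 steps that move a 1/N-sized
    chunk, so every GPU sends 2 * (N - 1) / N of the message in total.
    """
    if num_gpus < 2:
        return 0.0  # nothing to synchronize
    bytes_per_gpu = 2 * (num_gpus - 1) / num_gpus * message_bytes
    return bytes_per_gpu / (link_bandwidth_gbs * 1e9)

grad_bytes = 2e9  # hypothetical: 1 billion FP16 gradients, 2 bytes each
for link_gbs in (32, 300):  # hypothetical per-GPU link speeds in GB/s
    t = ring_allreduce_seconds(grad_bytes, num_gpus=8, link_bandwidth_gbs=link_gbs)
    print(f"{link_gbs:>3} GB/s link: {t * 1000:.1f} ms per all-reduce")
```

Because 2(N - 1)/N approaches 2 as GPUs are added, per-GPU traffic barely shrinks with scale, so link bandwidth rather than GPU count sets the floor on synchronization time.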

Overall, performance bottlenecks rooted in memory bandwidth restrictions underscore the need for careful hardware matching and workload tuning. More bandwidth not only unlocks better frame rates and training speeds but also enables entirely new classes of applications that were previously constrained by slow data access.

Technical Comparisons

Selecting the right type of memory can dramatically influence system performance, especially in demanding applications like AI training, gaming, and scientific computing. Two major contenders in the memory space are High Bandwidth Memory (HBM) and more traditional options such as DDR and GDDR.

Each brings unique strengths and weaknesses to the table, and their design differences directly affect bandwidth, latency, and overall cost.

HBM Architecture

High Bandwidth Memory represents a significant leap in memory technology. Unlike conventional memory chips laid out side by side on a circuit board, HBM uses a 3D-stacked approach.

Several DRAM dies are layered vertically into a compact, high-density package that occupies far less board space than traditional designs. Stacking allows greater capacity in a smaller footprint, making HBM especially attractive for GPUs and accelerators that need both high performance and power efficiency without consuming board space.

A major enabler of HBM’s performance is Through-Silicon Via (TSV) technology. TSVs are microscopic vertical connections that pass directly through the silicon dies, linking the stacked layers together.

Each stack is then mounted beside the processor on a silicon interposer, which can carry far more wires than a circuit board. The result is a very wide interface (1,024 bits per stack in widely deployed generations) running at a more modest per-pin speed than GDDR, over connections much shorter than the board traces used by older memory technologies. That width is where HBM’s bandwidth comes from, and the short connections let it move each bit with less power.
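The bandwidth formula from earlier applies to HBM too, with the wide interface doing most of the work. The sketch below compares one HBM3 stack (1,024-bit interface at the HBM3 peak pin rate of 6.4 Gb/s) with one GDDR6 chip (32-bit interface at an assumed 16 Gb/s per pin). Shipping products may run at different pin rates, and the function name is illustrative.

```python
def device_bandwidth_gbs(pin_rate_gbps: float, interface_bits: int) -> float:
    """Peak bandwidth of one memory device: per-pin rate x width / 8 bits per byte."""
    return pin_rate_gbps * interface_bits / 8

hbm3_stack = device_bandwidth_gbs(6.4, 1024)  # very wide, comparatively slow pins
gddr6_chip = device_bandwidth_gbs(16.0, 32)   # narrow, fast pins (assumed 16 Gb/s)
print(f"HBM3 stack: {hbm3_stack:.1f} GB/s")
print(f"GDDR6 chip: {gddr6_chip:.1f} GB/s")
print(f"GDDR6 chips needed to match one stack: {hbm3_stack / gddr6_chip:.1f}")
```

Even with pins running at well under half the speed, the 32-times-wider interface gives one HBM stack the bandwidth of more than a dozen GDDR6 chips in this example.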

Performance Trade-Offs

Deciding between HBM and traditional memory like DDR or GDDR often comes down to balancing bandwidth, power, and cost. HBM-equipped accelerators deliver from roughly 1 TB/s to several terabytes per second; NVIDIA’s H100 SXM, for example, is rated at about 3.35 TB/s. That far outpaces the roughly 50 to 100 GB/s of a typical dual-channel DDR desktop, and it exceeds GDDR graphics cards, which range from a few hundred GB/s to around 1 TB/s.

Latency is not where HBM pulls ahead: its underlying DRAM cells are similar to those in other memory, so access latency is broadly comparable. Its advantage is the sheer volume of data it delivers each second, along with lower energy per bit transferred. For applications where massive data exchange is vital, such as AI model training or professional rendering, that volume provides a clear advantage.

The story shifts, however, when considering cost and accessibility. HBM’s sophisticated manufacturing process and advanced integration drive up its price, making it rare in everyday consumer devices.

DDR and GDDR, on the other hand, offer a more budget-friendly solution, delivering sufficient bandwidth for mainstream gaming and general-purpose computing while keeping system costs manageable.

| Feature | HBM | GDDR | DDR |
|---|---|---|---|
| Typical bandwidth | ~1 to several TB/s | A few hundred GB/s to ~1 TB/s | ~50-100 GB/s (dual channel) |
| Latency | Broadly similar to other DRAM | Broadly similar | Broadly similar |
| Cost | Higher | Lower | Lower |

Both memory types continue to evolve, but their distinct characteristics mean each is suited to specific roles within the broader computing ecosystem. The ongoing push for more capable AI, faster rendering, and larger datasets ensures both HBM and traditional memory technologies play vital, if very different, parts in modern performance computing.

Optimization Strategies

Maximizing memory bandwidth is often a blend of hardware upgrades and software refinements. Bottlenecks can occur when the speed of data movement cannot keep pace with the demands of CPUs, GPUs, or advanced algorithms.

Addressing these challenges requires a thoughtful combination of system-level changes and programming techniques designed to make every byte transfer count.

Hardware Solutions

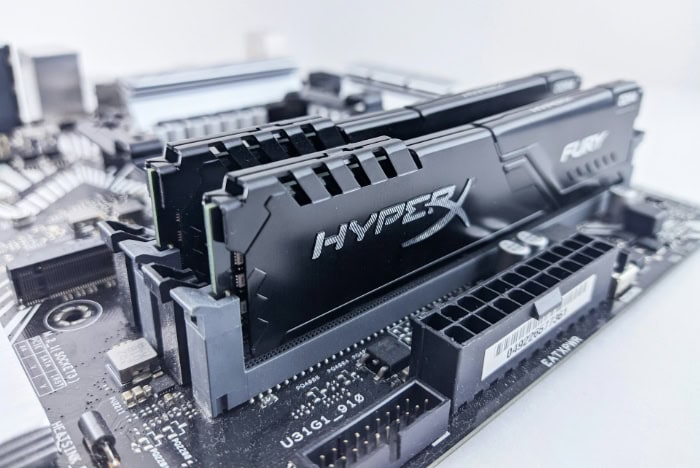

Hardware plays a foundational role in shaping memory bandwidth. Simple upgrades, like installing matched modules in the right slots so memory runs in dual-channel mode, double the theoretical peak bandwidth compared to single-channel operation, although real-world gains are usually smaller.

Dual-channel setups allow for simultaneous data transfers, improving responsiveness in tasks from gaming to content creation.

For those working with intensive data workloads or AI training, choosing a GPU equipped with HBM, such as NVIDIA’s A100 or H100 series, provides a substantial leap in available bandwidth. The higher data transfer rates and better energy efficiency of HBM-enabled GPUs ease many of the memory bottlenecks encountered during large-scale model training or scientific workloads.

Investing in the right hardware lays a solid foundation, but realizing optimal bandwidth also requires attention to how software interacts with memory.

Software Approaches

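Thoughtful coding practices and software-level adjustments help ensure that available bandwidth and memory are used efficiently. Gradient accumulation, for example, splits a large training batch into smaller micro-batches, computes gradients for each one, and sums them before a single weight update.

Because only one micro-batch’s activations need to be held at a time, this reduces peak memory use while preserving the large effective batch size. Strictly speaking it relieves pressure on memory capacity rather than raising bandwidth, but it lets large workloads run on hardware that could not otherwise hold the full batch in its fast memory.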
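A pure-Python sketch on a toy one-parameter linear model shows the key property of gradient accumulation: summing size-weighted micro-batch gradients reproduces the full-batch gradient, so the weight update is unchanged while only one micro-batch is processed at a time. The data, learning rate, and function names are hypothetical; real frameworks accumulate gradients in parameter buffers, and this sketch only mirrors the arithmetic.

```python
def grad_mse(w: float, xs: list[float], ys: list[float]) -> float:
    """Gradient of mean squared error for the model y_hat = w * x."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

def accumulated_grad(w: float, xs: list[float], ys: list[float],
                     micro_batch_size: int) -> float:
    """Process the batch in micro-batches and sum their size-weighted gradients."""
    n = len(xs)
    total = 0.0
    for start in range(0, n, micro_batch_size):
        mx = xs[start:start + micro_batch_size]
        my = ys[start:start + micro_batch_size]
        # Weight by this micro-batch's share of the full batch.
        total += grad_mse(w, mx, my) * len(mx) / n
    return total

xs = [float(i) for i in range(1, 9)]
ys = [3.0 * x + 0.5 for x in xs]
w = 1.0
full = grad_mse(w, xs, ys)
accum = accumulated_grad(w, xs, ys, micro_batch_size=2)
w_next = w - 0.01 * accum  # one optimizer step after all micro-batches
print(full, accum, w_next)
```

The two gradients agree up to floating-point rounding; what changes is how much data must be resident at once.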

Model compression methods such as pruning or quantization also yield significant gains. Pruning removes weights that contribute little to a neural network’s output, while quantization reduces the numerical precision of stored values, for example from 32-bit floating point to 8-bit integers.

When the compressed model is stored in a compact format, both techniques lower the number of bytes that must be transferred or processed, so the same hardware bandwidth serves more useful work. Software choices often bring surprising improvements, especially when paired with modern memory-aware programming libraries.
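A minimal sketch of symmetric int8 quantization shows where the bandwidth saving comes from: each weight shrinks from 4 bytes to 1, so four times as many weights move per byte of bandwidth. It uses a single scale for the whole tensor, a simplifying assumption (production schemes often use finer-grained scales), and the weights are made up.

```python
from array import array

def quantize_int8(values: array) -> tuple[array, float]:
    """Symmetric per-tensor int8 quantization: q = round(v / scale), |q| <= 127."""
    max_abs = max(abs(v) for v in values) or 1.0  # guard against all-zero input
    scale = max_abs / 127
    q = array("b", (max(-127, min(127, round(v / scale))) for v in values))
    return q, scale

def dequantize(q: array, scale: float) -> list[float]:
    return [x * scale for x in q]

weights = array("f", [0.12, -0.5, 0.33, 0.9, -0.77, 0.05])  # hypothetical weights
q, scale = quantize_int8(weights)
print(len(weights) * weights.itemsize, "bytes as float32")
print(len(q) * q.itemsize, "bytes as int8")
error = max(abs(a - b) for a, b in zip(weights, dequantize(q, scale)))
print(f"max reconstruction error: {error:.4f} (scale / 2 = {scale / 2:.4f})")
```

The reconstruction error stays within half a quantization step, which is the accuracy cost paid for moving a quarter of the bytes.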

Memory Management

Effective memory management is the final piece of the performance puzzle. Layer-wise offloading keeps the layers of a model that are not currently in use in slower, less expensive memory, such as the CPU’s system RAM, and copies each layer into high-speed GPU memory only when it is needed.

This frees scarce high-bandwidth memory for the work in progress, at the cost of extra transfers over the slower host-to-device link, so it works best when those copies can overlap with computation.
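The trade-off can be made concrete with a toy accounting model (hypothetical layer sizes, no real framework involved): walk the layers in order, keep only the current layer, plus optionally the next one being prefetched, in device memory, and count the bytes copied in from host memory.

```python
def simulate_offloading(layer_bytes: list[int],
                        prefetch: bool = True) -> tuple[int, int]:
    """Return (peak device bytes, total bytes copied host -> device) for one pass."""
    peak = 0
    copied = 0
    n = len(layer_bytes)
    for i in range(n):
        resident = layer_bytes[i]  # the layer currently computing
        if prefetch and i + 1 < n:
            resident += layer_bytes[i + 1]  # next layer copying in the background
        peak = max(peak, resident)
        copied += layer_bytes[i]  # every layer crosses the host-device link once
    return peak, copied

layers = [500_000_000] * 12  # hypothetical: 12 layers of 500 MB each
print(f"all layers resident: {sum(layers) / 1e9:.1f} GB")
peak, copied = simulate_offloading(layers)
print(f"offloaded peak: {peak / 1e9:.1f} GB, copied per pass: {copied / 1e9:.1f} GB")
```

Device memory drops from 6 GB to 1 GB, but all 6 GB now cross the host-to-device link on every pass, which is why prefetching the next layer while the current one computes matters.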

Reducing redundant data transfers further streamlines performance. Careful analysis of memory access patterns helps programmers eliminate unnecessary or duplicate data movements, conserving both bandwidth and system resources.
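Kernel (or loop) fusion is one common way to eliminate redundant transfers: instead of writing an intermediate result to memory and reading it back, consecutive operations are combined into a single pass. The sketch below computes `a * x + b` in two passes and in one, and counts the bytes an idealized implementation would move for each; the traffic figures are an accounting model rather than a measurement, and the function names are illustrative.

```python
from array import array

def two_pass(x: array, a: float, b: float) -> tuple[array, int]:
    """Unfused: the intermediate array tmp makes a round trip through memory."""
    tmp = array("f", (a * v for v in x))    # pass 1: read x, write tmp
    out = array("f", (v + b for v in tmp))  # pass 2: read tmp, write out
    traffic = 4 * len(x) * x.itemsize       # x read, tmp written, tmp read, out written
    return out, traffic

def fused(x: array, a: float, b: float) -> tuple[array, int]:
    """Fused: one pass computes a * v + b with no intermediate array."""
    out = array("f", (a * v + b for v in x))  # read x, write out
    traffic = 2 * len(x) * x.itemsize
    return out, traffic

x = array("f", [1.0, 2.0, 3.0, 4.0])
out_unfused, bytes_unfused = two_pass(x, 2.0, 0.5)
out_fused, bytes_fused = fused(x, 2.0, 0.5)
print(list(out_fused), bytes_unfused, bytes_fused)
```

The results match, but the fused version moves half the bytes, which for a bandwidth-bound operation translates almost directly into half the run time.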

Applications that regularly handle large datasets, such as scientific simulations or video processing, benefit greatly from memory-efficient strategies.

Applications and Industry Impact

Memory bandwidth does far more than shape isolated technical metrics; it serves as a driving force behind real-world innovations and end-user experiences in multiple industries. As workloads grow larger and more complex, the ability to move data quickly between memory and processor underpins progress in machine learning, entertainment, scientific computing, and beyond.

AI/ML Workloads

Modern artificial intelligence and machine learning applications rely on enormous data sets, intricate model architectures, and fast iteration cycles. For memory-bound workloads, higher memory bandwidth leads directly to faster model training and inference, slashing the time required for fine-tuning or deploying state-of-the-art models.

Transformer-based architectures, renowned for their expansive parameter counts and appetite for data, push memory subsystems to their limits. Systems with high bandwidth ensure that massive neural networks operate smoothly, supporting everything from natural language processing to generative AI tools. Enhanced memory throughput not only reduces bottlenecks but also enables researchers and engineers to experiment with increasingly ambitious algorithms.
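A common back-of-the-envelope bound illustrates why transformer inference is often bandwidth-bound: when generating text one token at a time for a single request, every parameter must be read from memory for each new token, so tokens per second cannot exceed bandwidth divided by the size of the weights. The sketch ignores KV-cache traffic, compute time, and batching; the 7-billion-parameter model and both bandwidth figures are hypothetical.

```python
def max_decode_tokens_per_second(num_params: float, bytes_per_param: float,
                                 bandwidth_gbs: float) -> float:
    """Upper bound for batch-size-1 decoding: all weights are streamed once per token."""
    weight_bytes = num_params * bytes_per_param
    return bandwidth_gbs * 1e9 / weight_bytes

for label, bytes_per_param in (("FP16", 2), ("INT8", 1), ("INT4", 0.5)):
    for bandwidth in (100, 3350):  # hypothetical: desktop DDR vs. HBM accelerator
        rate = max_decode_tokens_per_second(7e9, bytes_per_param, bandwidth)
        print(f"{label} @ {bandwidth:>4} GB/s: <= {rate:.0f} tokens/s")
```

Batching many requests reuses each weight read across several tokens, which is one reason inference servers batch aggressively; the bound also shows how quantization raises the ceiling on the same hardware.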

Gaming and Graphics

The gaming and graphics industries benefit tremendously from advances in memory bandwidth. High-speed data transfers allow for faster texture streaming, letting games load high-resolution assets and detailed environments with minimal delay.

Fluid gameplay, particularly in open-world or graphically rich titles, depends on delivering textures and geometry to the GPU with constant speed and reliability. Direct improvements in bandwidth often translate to higher frame rates and reduced stutter, especially in scenarios where memory bandwidth is what holds the GPU back.

Streamers, professional gamers, and everyday players alike notice the difference that seamless memory access makes in the quality of interactive experiences.

HPC and Scientific Computing

High-performance computing (HPC) and scientific simulations place extreme demands on system memory. Many scientific workloads, such as physics simulations or genomic analysis, are often constrained not by processing power, but by how quickly data can be fed to computation engines.

Higher memory bandwidth helps mitigate the so-called “memory wall,” where computational speed exceeds the memory system’s ability to supply data. This improvement supports more efficient parallel processing, letting thousands of cores or GPUs collaborate on massive problems without unnecessary waiting.

Enhanced bandwidth promotes greater scalability and accuracy in research, letting scientists tackle simulations and calculations that were once out of reach.

Conclusion

Memory bandwidth stands out as a fundamental metric shaping the speed and responsiveness of today’s most demanding computing tasks. From AI training to immersive gaming and scientific discovery, the ability to move vast amounts of data quickly can make the difference between cutting-edge performance and frustrating slowdowns.

Achieving top-tier results requires more than just powerful hardware; carefully chosen upgrades work best when paired with smart software strategies and thoughtful memory management. As next-generation artificial intelligence, graphics, and scientific applications continue to grow in complexity, memory bandwidth will remain at the forefront of performance innovation, ensuring that processors and accelerators deliver on their full potential.

The future of breakthroughs in AI and high-performance computing will be defined not only by how fast processors can think, but also by how quickly they can access and process the data that fuels progress.