What Is Load Balancing? The Key to Handling Traffic Surges

Modern networks face constant pressure to deliver fast, reliable, and seamless experiences, especially as businesses and applications handle ever-growing demands. Traffic surges, unpredictable user patterns, and server downtime can threaten performance and customer satisfaction.

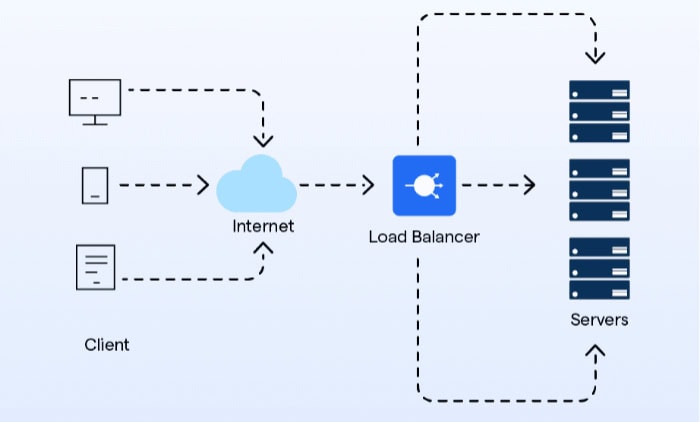

Enter load balancing, a powerful technique designed to share the workload across multiple servers, ensuring no single server bears the brunt of the demand.

Definition and Core Purpose

Load balancing is a critical method used to distribute incoming network traffic across multiple servers. Its purpose is simple: to prevent any single server from becoming overwhelmed and to ensure that resources are used efficiently.

This process is vital for maintaining the speed, reliability, and resilience of modern applications, particularly in scenarios where millions of users may be accessing the same service simultaneously. Whether it’s a popular e-commerce website during a holiday sale or a streaming platform during a live event, load balancing is what keeps things running smoothly.

Problem It Solves

When multiple users or devices try to access a server at the same time, it can lead to server overload. This not only results in slower response times but can also cause crashes or complete unavailability of services.

These situations are especially common during sudden spikes in traffic, such as ticket sales for a major concert or a viral social media campaign.

Another issue is high latency, which occurs when overloaded servers cannot process requests promptly. For users, this means slower website loading times, buffering during video streams, or even failed transactions.

Furthermore, a lack of distribution in traffic management makes systems vulnerable to single points of failure. If one server goes down without a backup plan, the entire service could become inaccessible.

In short, without load balancing, organizations face risks ranging from poor user experiences to financial losses due to downtime. These risks become even more pronounced as digital services grow and the demand for 24/7 availability increases.

Key Objectives

The primary function of load balancing is to ensure that traffic is handled in a way that maintains optimal system performance. One of its main goals is to maximize throughput, enabling servers to process as many requests as possible without delays or interruptions. This directly translates to smoother services and happier users.

Another important objective is to minimize response time. By efficiently routing requests to the least congested servers, load balancing reduces the time it takes for users to receive the information or service they requested.

This is particularly vital for industries like finance or healthcare, where speed can have significant consequences.

Fault tolerance is another critical goal. Load balancers can detect when a particular server is unresponsive or functioning poorly, automatically redirecting traffic to other healthy servers.

This helps maintain uptime and reduces the likelihood of complete service outages.

Finally, load balancing ensures that resources such as CPU, memory, and bandwidth are utilized evenly across all available servers. This prevents any single server from being overburdened while others remain underutilized.

By maintaining this equilibrium, organizations can make the most of their infrastructure and avoid unnecessary costs.

How Load Balancing Works

Load balancing often operates behind the scenes, yet it’s essential for ensuring that services can scale, remain reliable, and handle demands efficiently. At its core, load balancing distributes incoming traffic across multiple servers based on predefined strategies and rules.

To achieve this, load balancers can be implemented in various forms and rely on intelligent algorithms to manage traffic flow. This ensures that applications remain responsive and resilient, even during high demand or server failures.

Types of Load Balancers

Load balancers are not one-size-fits-all solutions. They come in different forms, each offering unique advantages based on the infrastructure and business needs.

The three main types are hardware-based, software-based, and cloud-based load balancers.

Hardware load balancers are physical devices installed within data centers. They are known for their high performance and reliability but come with significant upfront costs.

These solutions often require specialized expertise to set up and maintain, making them more suitable for larger enterprises with high-traffic workloads and robust infrastructure.

On the other hand, software load balancers are more versatile and cost-effective. These can be deployed on virtual machines or standard servers, offering flexibility for businesses of all sizes.

Software solutions are especially useful for environments that need frequent updates or custom configurations, as they are easier to modify and scale.

Cloud-based load balancers, offered by providers like AWS, Google Cloud, and Azure, have become increasingly popular due to their scalability and ease of deployment. These solutions are hosted in the cloud and integrate seamlessly with other cloud services, making them ideal for organizations that prioritize agility and pay-as-you-go pricing models.

Load balancers also differ in how they manage traffic. Application-level load balancers (Layer 7) operate on the application layer of the OSI model.

These balancers make decisions based on application-specific data, such as HTTP headers, URLs, and cookies, allowing for advanced routing capabilities. In contrast, network-level load balancers (Layer 4) function at a lower level, routing traffic based on network information like IP addresses and TCP/UDP ports.

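Layer 4 balancers are generally faster and simpler because they do not need to inspect request contents. Layer 7 balancers provide greater flexibility and control, at the cost of extra processing per request.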
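To make the Layer 4 / Layer 7 distinction concrete, here is a minimal sketch of the two kinds of routing decision. The `Request` model, the `ROUTES` table, the server names, and the `X-User-Id` header are all hypothetical. The Layer 4 function only sees connection fields (protocol, IPs, ports), while the Layer 7 function can also read the URL path and HTTP headers.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Request:
    """Hypothetical request model: connection (Layer 4) fields plus HTTP (Layer 7) fields."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str                                  # "TCP" or "UDP"
    path: str = "/"                                # only visible to a Layer 7 balancer
    headers: dict = field(default_factory=dict)    # only visible to a Layer 7 balancer


def _bucket(key: str, n: int) -> int:
    """Stable hash of a string into one of n buckets (SHA-256, not the per-process hash())."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % n


def layer4_pick(req: Request, servers: list) -> str:
    """Layer 4: hash the connection 5-tuple; request contents are never inspected."""
    key = f"{req.protocol}|{req.src_ip}|{req.src_port}|{req.dst_ip}|{req.dst_port}"
    return servers[_bucket(key, len(servers))]


# Layer 7: hypothetical routing table from URL path prefix to server pool.
ROUTES = [
    ("/api/", ["api-1", "api-2"]),
    ("/static/", ["static-1"]),
]
DEFAULT_POOL = ["web-1", "web-2"]


def layer7_pick(req: Request) -> str:
    """Layer 7: choose a pool from the HTTP path, then a server within that pool."""
    pool = next((p for prefix, p in ROUTES if req.path.startswith(prefix)), DEFAULT_POOL)
    # Spread users across the pool by the hypothetical X-User-Id header, else by client IP.
    key = req.headers.get("X-User-Id", req.src_ip)
    return pool[_bucket(key, len(pool))]
```

Because the Layer 4 version never parses the request, it is cheaper per connection, but it cannot send `/api/` traffic to a different pool than `/static/` traffic the way the Layer 7 version can.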

Common Algorithms

Once a load balancer receives incoming traffic, it needs a way to decide which server will handle each request. This decision-making process is guided by algorithms designed to efficiently distribute workloads and maintain system performance.

The round-robin algorithm is one of the simplest methods: requests are handed to each server in turn, in a fixed rotating sequence. This approach works well when all servers have similar processing capabilities and requests cost roughly the same, because each server receives an equal number of requests.
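A minimal round-robin balancer can be sketched in a few lines; the server names below are placeholders. Note that it counts requests, not work, so it only balances load when requests are roughly uniform.

```python
import itertools


class RoundRobinBalancer:
    """Hands out servers in a fixed rotating order: s1, s2, s3, s1, s2, ..."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        self._cycle = itertools.cycle(list(servers))

    def next_server(self):
        return next(self._cycle)


lb = RoundRobinBalancer(["app-1", "app-2", "app-3"])
print([lb.next_server() for _ in range(5)])
# ['app-1', 'app-2', 'app-3', 'app-1', 'app-2']
```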

The least connections algorithm assigns each new request to the server with the fewest active connections. This approach is particularly effective when workloads vary, because the active connection count acts as a rough proxy for current load: servers busy with long-running or intensive requests receive fewer new ones until they catch up.
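The sketch below tracks active connections per server and picks the minimum. It assumes the caller reports when each request finishes via `release()`; the server names are placeholders.

```python
class LeastConnectionsBalancer:
    """Routes each new request to the server with the fewest active connections."""

    def __init__(self, servers):
        # Active connection count per server; all servers start idle.
        self.active = {s: 0 for s in servers}

    def acquire(self):
        # min() returns the first server with the lowest count, so ties follow list order.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when a request or connection on this server finishes.
        self.active[server] = max(0, self.active[server] - 1)


lb = LeastConnectionsBalancer(["app-1", "app-2"])
a = lb.acquire()      # app-1 (both idle, list order breaks the tie)
b = lb.acquire()      # app-2
c = lb.acquire()      # app-1 (tie at 1 each)
lb.release(b)         # app-2 finishes its request
print(lb.acquire())   # app-2, now the least busy server
```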

Weighted distribution introduces another layer of complexity by assigning different weights to servers based on their capacity. For example, a powerful server may be given a higher weight, allowing it to handle a proportionally larger share of the traffic compared to less capable servers.

This method ensures that resources are utilized efficiently.
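One common way to implement weighted distribution is smooth weighted round-robin, the scheme NGINX uses for weighted upstream servers. The sketch below is a minimal version of that technique with made-up server names and weights: in every cycle of `total` picks, each server is chosen exactly as many times as its weight, and heavy servers are interleaved with light ones instead of receiving their requests in a burst.

```python
class SmoothWeightedRoundRobin:
    """Smooth weighted round-robin: picks are proportional to weight and evenly interleaved."""

    def __init__(self, weights):
        self.weights = dict(weights)                  # server -> configured weight (> 0)
        self.current = {s: 0 for s in self.weights}   # running score per server
        self.total = sum(self.weights.values())

    def next_server(self):
        # Every server earns its weight...
        for server, weight in self.weights.items():
            self.current[server] += weight
        # ...the server with the highest accumulated score is chosen...
        best = max(self.current, key=self.current.get)
        # ...and pays back the total, dropping it behind the others.
        self.current[best] -= self.total
        return best


lb = SmoothWeightedRoundRobin({"big": 5, "small-1": 1, "small-2": 1})
print([lb.next_server() for _ in range(7)])
# ['big', 'big', 'small-1', 'big', 'small-2', 'big', 'big']
```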

Session persistence, often referred to as “sticky sessions,” is a closely related technique. Rather than being a distribution algorithm in its own right, it is usually layered on top of one, ensuring that requests from the same user are consistently directed to the same server.

Session persistence is particularly useful for applications that rely on user-specific data, such as shopping carts or authentication systems, as it maintains continuity and avoids disruptions.
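A common way to implement stickiness is with a cookie that the load balancer sets on the first response. The sketch below assumes a hypothetical cookie name, `lb_server`, and uses round-robin to place new sessions. Returning clients are pinned to the server named in their cookie as long as that server is still in the pool, and are reassigned otherwise.

```python
import itertools


class StickyBalancer:
    """Cookie-based session persistence layered over round-robin."""

    COOKIE = "lb_server"  # hypothetical cookie name

    def __init__(self, servers):
        self.servers = list(servers)
        self._rr = itertools.cycle(self.servers)

    def route(self, cookies: dict) -> tuple:
        """Return (server, cookies_to_set) for a request carrying `cookies`."""
        pinned = cookies.get(self.COOKIE)
        if pinned in self.servers:
            return pinned, {}                     # keep the existing affinity
        server = next(self._rr)                   # new or orphaned session
        return server, {self.COOKIE: server}      # ask the client to remember it


lb = StickyBalancer(["app-1", "app-2"])
server, set_cookie = lb.route({})   # first visit: app-1, plus a cookie naming it
again, _ = lb.route(set_cookie)     # same client returns with the cookie
other, _ = lb.route({})             # a different, new client
print(server, again, other)         # app-1 app-1 app-2
```

The trade-off is that pinned users can keep an overloaded server busy, and their session state is lost if that server fails, which is one reason many applications move session data into a shared store instead.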

With these algorithms in place, load balancers dynamically allocate traffic to servers in a way that optimizes performance and maintains a seamless user experience. Each algorithm has its strengths, and the choice ultimately depends on the specific requirements of the application and infrastructure.

Benefits of Load Balancing

Load balancing is not just about distributing traffic; it’s also a crucial mechanism for improving performance, reliability, and scalability across an organization’s infrastructure. By efficiently allocating resources and ensuring systems remain responsive under pressure, load balancing provides a solid foundation for businesses aiming to deliver consistent and enjoyable user experiences.

Its benefits extend beyond managing traffic to support long-term growth and resilience, even in the face of unexpected challenges.

Scalability and Flexibility

Modern applications often experience fluctuating traffic, from normal daily activity to sudden surges brought on by special events or promotions. Load balancing makes it possible to scale horizontally to accommodate this growth by adding more servers to the infrastructure.

This expansion allows businesses to meet demand without overburdening existing resources, ensuring that performance remains smooth, even during periods of peak activity.

Cloud-native environments take this flexibility further by offering dynamic solutions, such as auto-scaling groups. These systems automatically adjust server capacity based on real-time traffic patterns, adding servers when demand spikes and downsizing during quieter periods to minimize costs.

This adaptability is particularly advantageous for businesses that serve global user bases, as cloud-based scaling ensures capacity is available where and when it’s needed most.
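Auto-scaling decisions are often expressed as target tracking: keep a metric such as average CPU near a target by resizing the server group. The sketch below borrows the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, `desired = ceil(current * observed / target)`, and clamps the result to a group's minimum and maximum size. The function name, parameters, and default bounds are assumptions for illustration, not any cloud provider's API.

```python
def desired_capacity(current_servers: int, avg_cpu_pct: int, target_cpu_pct: int,
                     min_servers: int = 2, max_servers: int = 20) -> int:
    """Target-tracking sketch: size the group so average CPU moves toward the target.

    Uses integer percentages and integer ceiling division to avoid float rounding.
    """
    # ceil(current * observed / target), computed with integers only
    desired = (current_servers * avg_cpu_pct + target_cpu_pct - 1) // target_cpu_pct
    return max(min_servers, min(max_servers, desired))


print(desired_capacity(4, avg_cpu_pct=90, target_cpu_pct=60))  # spike: scale out to 6
print(desired_capacity(6, avg_cpu_pct=20, target_cpu_pct=60))  # quiet: scale in to 2
```

Real auto-scalers add cooldown periods or tolerances so that small metric fluctuations do not trigger constant resizing.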

By leveraging these capabilities, load balancing gives organizations the tools to grow and adapt without sacrificing application performance or reliability, even in rapidly changing environments.

Enhanced Reliability

One of the most significant advantages of load balancing is the way it strengthens system reliability. Failover mechanisms are built into load balancers to protect against server failures.

When one server becomes unavailable, the load balancer automatically redirects traffic to other healthy servers, ensuring uninterrupted service for users. This redundancy removes any individual server as a single point of failure that could otherwise disrupt operations entirely.

In addition to failover, load balancers run health checks: periodic probes, such as opening a TCP connection or sending an HTTP request to a status endpoint, that monitor the status of each server. These checks identify issues like unresponsiveness or degraded performance and prevent traffic from being routed to problematic servers.

Instead, the load balancer reroutes requests to functional servers until the issue is resolved. This proactive approach minimizes downtime and keeps services reliable, even when unexpected technical problems arise.
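The sketch below shows how health-check results might drive which servers stay in rotation. It assumes probe results are fed in from elsewhere (for example, a hypothetical HTTP check against a `/health` endpoint) and uses consecutive-result thresholds, a pattern many load balancers expose as "healthy" and "unhealthy" threshold settings, so that a single dropped probe does not cause a server to flap in and out of the pool.

```python
class HealthTracker:
    """Marks servers up or down from a stream of health-check results."""

    def __init__(self, servers, unhealthy_threshold=3, healthy_threshold=2):
        self.unhealthy_threshold = unhealthy_threshold  # consecutive failures to mark down
        self.healthy_threshold = healthy_threshold      # consecutive successes to mark up
        self.healthy = {s: True for s in servers}
        self.streak = {s: 0 for s in servers}  # consecutive results contradicting current state

    def record(self, server, probe_ok: bool):
        if probe_ok == self.healthy[server]:
            self.streak[server] = 0            # result agrees with current state
            return
        self.streak[server] += 1
        limit = self.healthy_threshold if probe_ok else self.unhealthy_threshold
        if self.streak[server] >= limit:
            self.healthy[server] = probe_ok    # flip the server's state
            self.streak[server] = 0

    def pool(self):
        """Servers currently eligible to receive traffic."""
        return [s for s, ok in self.healthy.items() if ok]


hc = HealthTracker(["app-1", "app-2"])
for ok in (False, False, False):
    hc.record("app-2", ok)
print(hc.pool())  # ['app-1']
for ok in (True, True):
    hc.record("app-2", ok)
print(hc.pool())  # ['app-1', 'app-2']
```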

Overall, load balancing helps create a more resilient infrastructure capable of withstanding failures and maintaining high availability, which is critical for businesses that depend on seamless, 24/7 operations.

Improved User Experience

Fast and reliable performance is the cornerstone of an excellent user experience, and load balancing plays a vital role in delivering it. By intelligently distributing traffic, load balancers reduce latency, ensuring that users receive quick responses to their requests.

This is particularly important in systems with geographically distributed servers, where load balancers can direct traffic to the server closest to the user for faster processing.
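As a rough illustration of proximity-based routing, the sketch below picks the region closest to a user by great-circle (haversine) distance. The region names and coordinates are hypothetical, and using straight-line distance is a simplifying assumption: production systems more often rely on measured latency or DNS-based geolocation. The optional `healthy_regions` argument shows how proximity can be combined with health checks so that users fall back to the next-closest region.

```python
import math

# Hypothetical regions and approximate (latitude, longitude) coordinates.
REGIONS = {
    "eu-west": (53.35, -6.26),    # Dublin
    "us-east": (38.90, -77.04),   # Washington, D.C. area
    "ap-south": (19.08, 72.88),   # Mumbai
}


def great_circle_km(a, b):
    """Haversine distance between two (lat, lon) points, in kilometres."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def nearest_region(user_location, healthy_regions=None):
    """Pick the closest region, optionally restricted to regions passing health checks."""
    candidates = healthy_regions if healthy_regions is not None else REGIONS.keys()
    return min(candidates, key=lambda r: great_circle_km(user_location, REGIONS[r]))


print(nearest_region((48.85, 2.35)))                           # user in Paris: eu-west
print(nearest_region((48.85, 2.35), ["us-east", "ap-south"]))  # eu-west down: us-east
```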

Load balancing also ensures consistent performance, even during high-traffic periods. Without it, users might experience delays, buffering, or failed transactions when servers become overwhelmed.

By evenly sharing the workload, load balancers prevent these bottlenecks and provide users with the smooth interactions they expect, regardless of how many others are accessing the system at the same time.

Challenges and Best Practices

While load balancing is a powerful tool for improving performance, reliability, and scalability, it is not without its challenges. Implementing and managing an effective load-balancing solution can involve complexities that must be addressed to ensure a smooth and efficient operation.

Additionally, proper strategies and practices are essential to mitigate risks and maximize the benefits of load balancing.

Common Challenges

One of the primary challenges of load balancing is the complexity involved in its configuration and ongoing maintenance. Setting up a load balancer requires precise routing rules, integration with underlying infrastructure, and alignment with application logic.

Even small misconfigurations can lead to inefficiencies, such as uneven traffic distribution or disruptions in user sessions. As the infrastructure grows more complex, maintaining load balancers can also become increasingly demanding, requiring specialized expertise.

Another issue is the potential for a single point of failure within the load-balancing system itself. While load balancers are designed to eliminate single points of failure among servers, a lone load balancer sits in the path of all traffic, so it can become both a single point of failure and a bottleneck if its own reliability and capacity are not prioritized.

A malfunctioning or incorrectly configured load balancer could lead to traffic being delayed, misrouted, or completely blocked.

Furthermore, the dynamic nature of modern infrastructure, such as cloud environments and containerized applications, introduces additional challenges. Load balancers must be able to adapt to changes like new server instances or variations in traffic patterns without constant manual intervention.

Failing to address these complications may cause inefficiencies or service disruptions, limiting the effectiveness of the load-balancing strategy.
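Adapting to new or departing instances without manual intervention usually means the balancer's server list can change while it is running. The sketch below is a round-robin pool with `register()` and `deregister()` methods; in practice these would be driven by a service-discovery system or an auto-scaling hook, which is assumed here rather than shown. A lock keeps the list consistent if routing and membership changes happen on different threads.

```python
import threading


class DynamicPool:
    """Round-robin pool whose membership can change at runtime."""

    def __init__(self):
        self._servers = []
        self._index = 0
        self._lock = threading.Lock()

    def register(self, server):
        with self._lock:
            if server not in self._servers:
                self._servers.append(server)

    def deregister(self, server):
        with self._lock:
            if server in self._servers:
                self._servers.remove(server)

    def next_server(self):
        with self._lock:
            if not self._servers:
                raise RuntimeError("no servers registered")
            self._index %= len(self._servers)   # keep the index valid after removals
            server = self._servers[self._index]
            self._index += 1
            return server


pool = DynamicPool()
pool.register("app-1")
pool.register("app-2")
print([pool.next_server() for _ in range(3)])  # ['app-1', 'app-2', 'app-1']
pool.register("app-3")                         # scale-out event
print([pool.next_server() for _ in range(3)])  # ['app-2', 'app-3', 'app-1']
```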

Mitigation Strategies

To address these challenges, organizations can adopt several best practices that enhance the reliability and efficiency of their load-balancing systems. One effective approach is implementing redundant load balancers.

By deploying an active/passive setup, where a secondary load balancer remains on standby, traffic can be redirected to the standby with minimal interruption if the primary load balancer encounters an issue. This redundancy preserves continuity and greatly reduces the risk of a total system failure caused by a single load balancer.
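A minimal sketch of the standby side of an active/passive pair follows. It assumes the primary sends periodic heartbeats and that the standby takes over after a configurable silence. Timestamps are passed in explicitly so the logic can be followed without real clocks. In real deployments the takeover usually means claiming a shared virtual IP, for example with VRRP, which is outside this sketch.

```python
class PassiveNode:
    """Standby load balancer that promotes itself when the active node goes quiet."""

    def __init__(self, timeout: float = 3.0):
        self.timeout = timeout          # seconds of silence tolerated before takeover
        self.last_heartbeat = None      # time of the last heartbeat from the primary
        self.active = False

    def on_heartbeat(self, now: float):
        self.last_heartbeat = now

    def check(self, now: float) -> bool:
        """Return True if this node is (now) the active one."""
        if (not self.active
                and self.last_heartbeat is not None
                and now - self.last_heartbeat > self.timeout):
            # Primary presumed dead: take over. Once promoted, this sketch stays active;
            # handing traffic back to a recovered primary needs separate coordination.
            self.active = True
        return self.active


standby = PassiveNode(timeout=3.0)
standby.on_heartbeat(now=10.0)
print(standby.check(now=12.0))  # False: last heartbeat was 2 s ago
print(standby.check(now=13.5))  # True: 3.5 s of silence exceeds the timeout
```

The timeout is a trade-off: a short one fails over quickly but risks both nodes acting as active ("split brain") during a brief network hiccup, while a long one keeps traffic on a dead primary for longer.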

Automated monitoring and dynamic adjustments are also critical for overcoming common challenges. Monitoring tools allow administrators to track the health and performance of both servers and load balancers in real time.

When issues are detected, such as a failing server or an overloaded node, dynamic adjustments can automatically redistribute traffic or notify teams to resolve the problem. This proactive approach minimizes downtime and ensures systems remain responsive even when unexpected issues arise.
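As one illustration of such dynamic adjustments, the sketch below turns per-server CPU readings into new routing weights and a list of servers to alert on. The heuristic (weight proportional to idle CPU, with a floor of 1) and the 85% alert threshold are assumptions chosen for the example, not a standard. The resulting weights could feed a weighted distribution algorithm.

```python
def adjust_weights(cpu_utilization: dict, alert_threshold: float = 0.85, max_weight: int = 10):
    """Recompute routing weights from spare CPU capacity and flag overloaded servers.

    cpu_utilization maps server -> CPU usage as a fraction (0.0 to 1.0).
    Returns (weights, alerts).
    """
    weights, alerts = {}, []
    for server, cpu in cpu_utilization.items():
        spare = max(0.0, 1.0 - cpu)
        # Busier servers get smaller weights; the floor of 1 keeps every server in rotation.
        weights[server] = max(1, round(spare * max_weight))
        if cpu >= alert_threshold:
            alerts.append(server)   # e.g. notify the on-call team
    return weights, alerts


weights, alerts = adjust_weights({"app-1": 0.20, "app-2": 0.50, "app-3": 0.95})
print(weights)  # {'app-1': 8, 'app-2': 5, 'app-3': 1}
print(alerts)   # ['app-3']
```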

Another way to reduce the complexity of load balancing is by leveraging managed cloud services. Offerings such as AWS Application Load Balancer (ALB) provide built-in support for dynamic scaling, health checks, and traffic distribution, reducing the burden on IT teams. (NGINX, often mentioned alongside them, is a software load balancer that teams typically deploy and operate themselves rather than a managed service.)

These managed services often integrate seamlessly with other cloud-native tools, making them a practical choice for businesses that value agility and ease of use.

Conclusion

Load balancing plays an essential role in building fast, reliable, and scalable systems that can handle the demands of modern applications. By distributing traffic intelligently, it ensures that no single server becomes overwhelmed while enhancing flexibility, reliability, and user satisfaction.

From preventing downtime to improving response times, its benefits are far-reaching and critical to maintaining seamless operations.

While challenges like configuration complexity and potential single points of failure exist, adopting strategies such as redundancy, automated monitoring, and managed solutions can address these issues effectively. With the right implementation and approach, load balancing becomes a valuable asset in supporting growth, meeting user expectations, and ensuring the consistent delivery of high-quality services.